Voiceflow Multi-modal Projects

Juan Carlos Quintero, Founder

Voiceflow Multi-modal projects are finally here! 🎉

This week, the Voiceflow team released the long-anticipated feature that enables multi-modal projects.

Let's talk about what this means and how it can be used.

Multi-Modal Projects

Up until this week, when you created a new project on Voiceflow, you had to choose between a Chat project and a Voice project.

If you've followed the company long enough, you know it started with voice assistants (it's even in the company's name).

You could build Alexa skills, Google Actions, etc.

But with the rise of LLMs, ChatGPT, and the whole AI explosion, Voiceflow switched gears to support chat assistants as well.

It was a great decision, in my opinion. The product was already mature thanks to all the infrastructure they had built and the lessons they learned from building a platform for voice assistants.

With this release, they have removed the distinction between voice and chat assistants, so your agents can now use audio inputs and outputs.

Now, let's quickly go over how it works and where to find things.

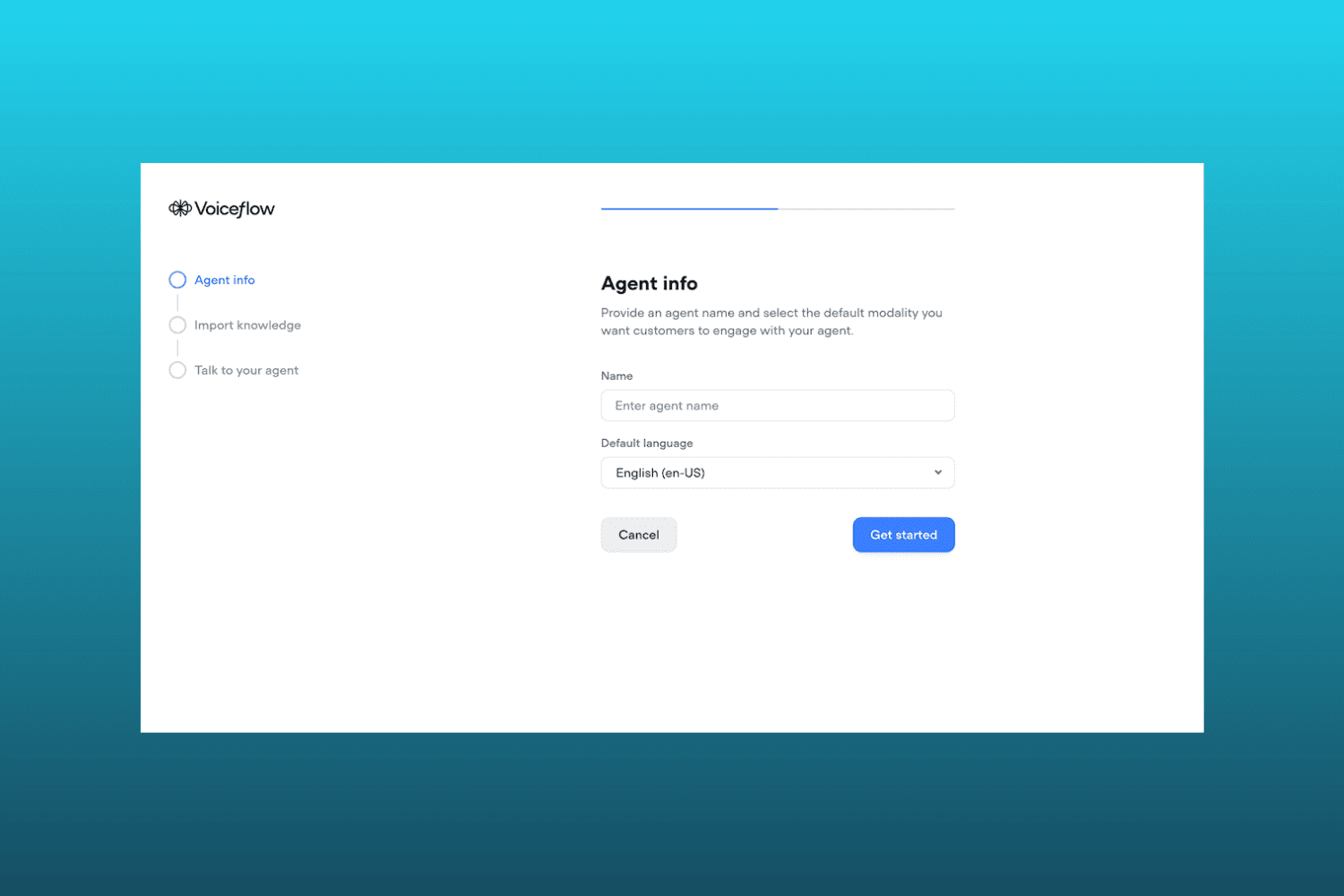

New Onboarding

When you create a new project, you no longer have to choose between a Chat or Voice project. Just add your agent's name, connect a Knowledge Base, and you're good to go.

By default, the agent has audio capabilities, so you don't need to do anything else besides tweaking a few settings.

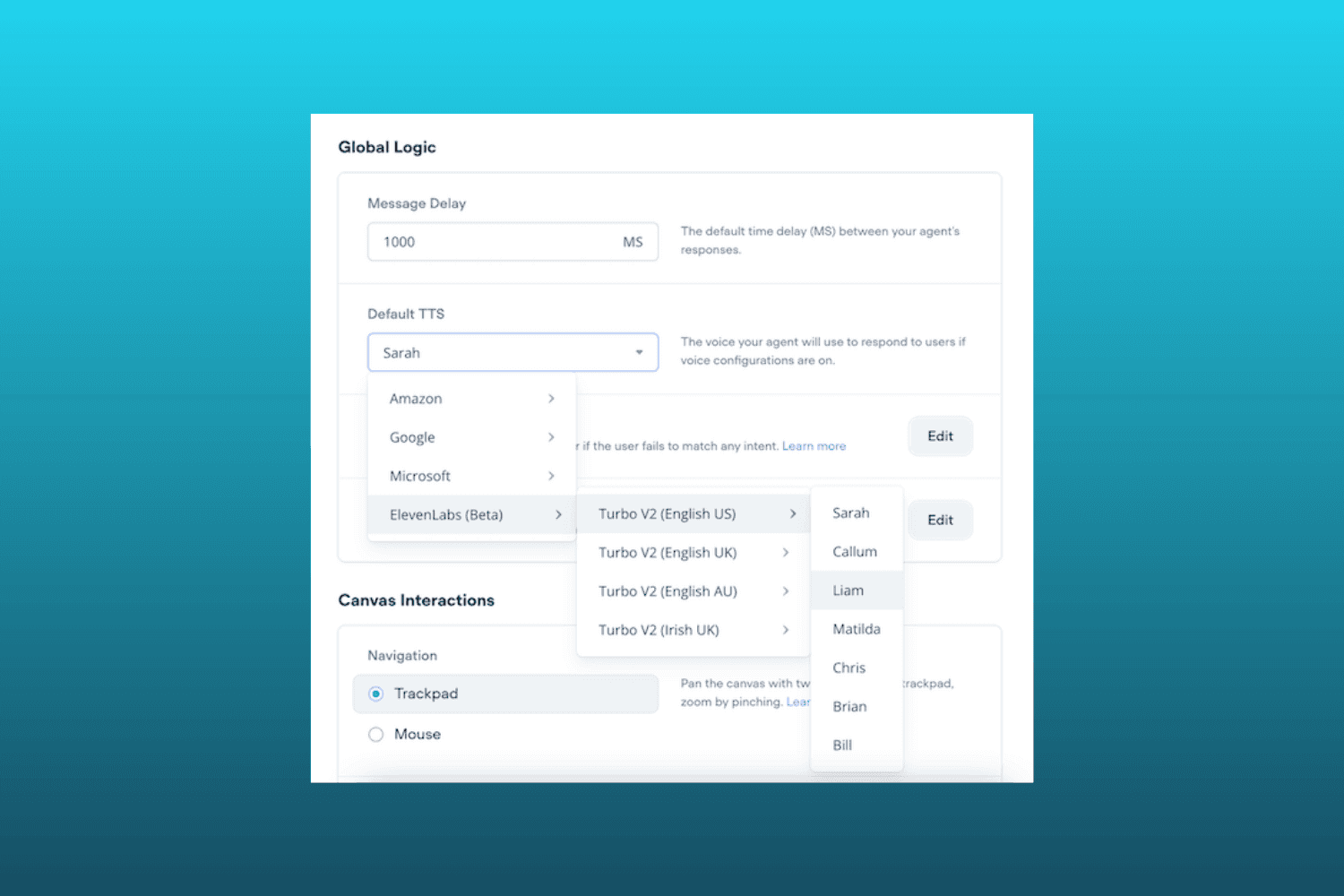

TTS Setting

You can select which TTS (Text-to-Speech) voice you want for your responses.

You can choose between different options, including Amazon, Google, Microsoft, and ElevenLabs (in beta).

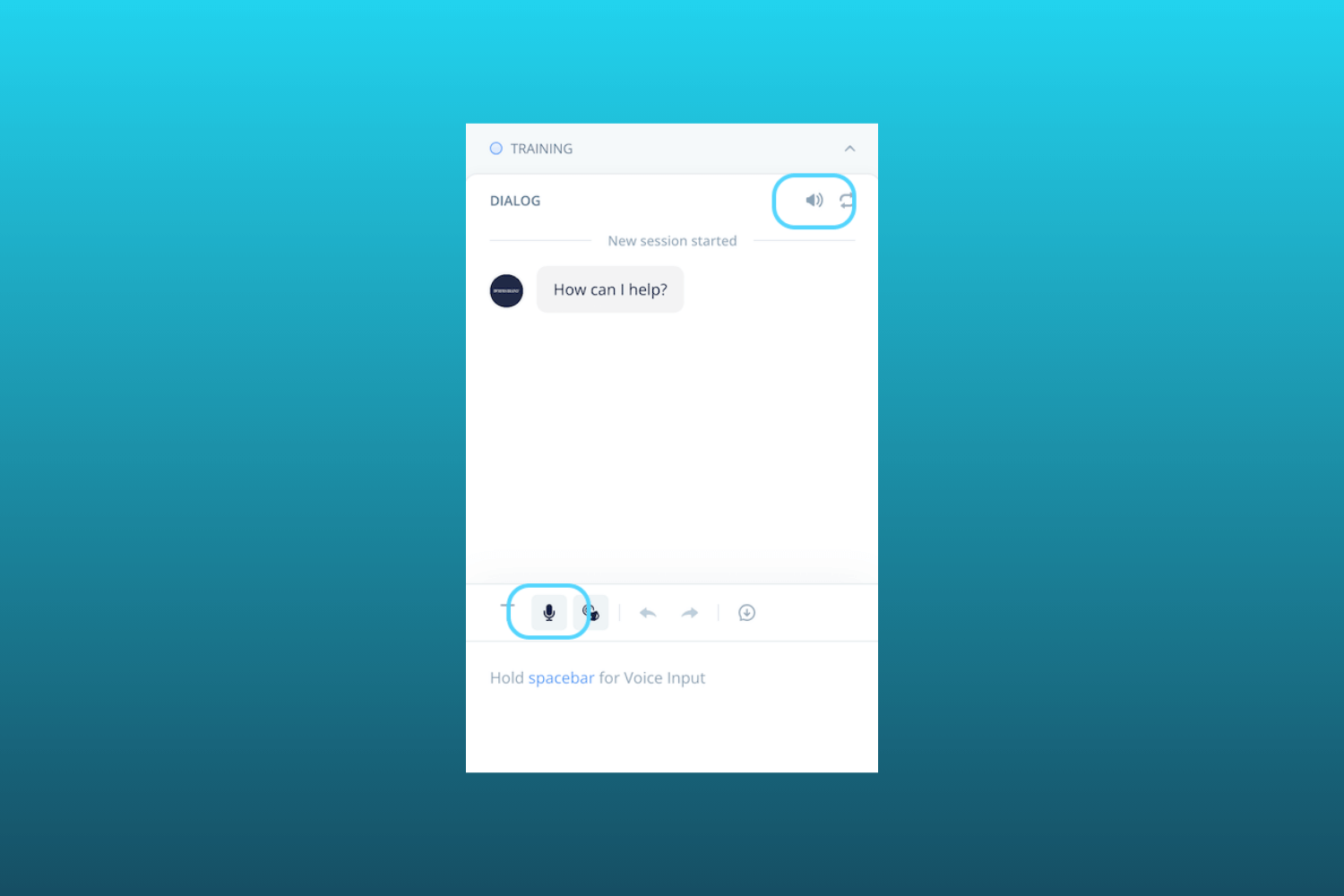

Testing Tool

To make testing easier, you can use audio inputs and outputs in the prototyping tool without having to publish the agent first.

To enable audio inputs, click the Voice button in the input section and accept the browser's request to use your mic.

Then, just click the Voice button whenever you want to use audio input.

To enable audio outputs, click the top bar of the chat window, and you will start hearing the agent's responses.

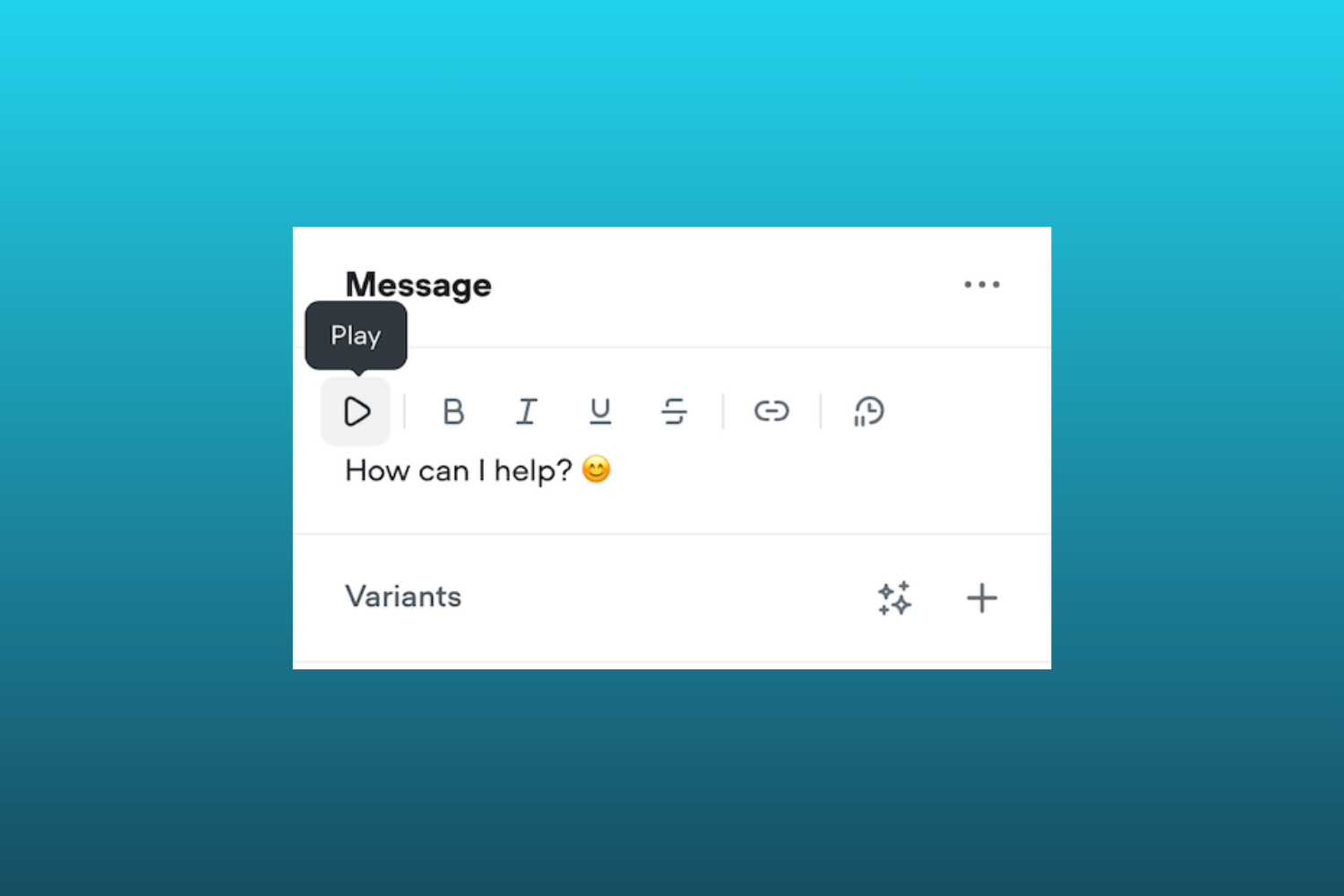

You can also play audio inside the Message step.

Web Chat

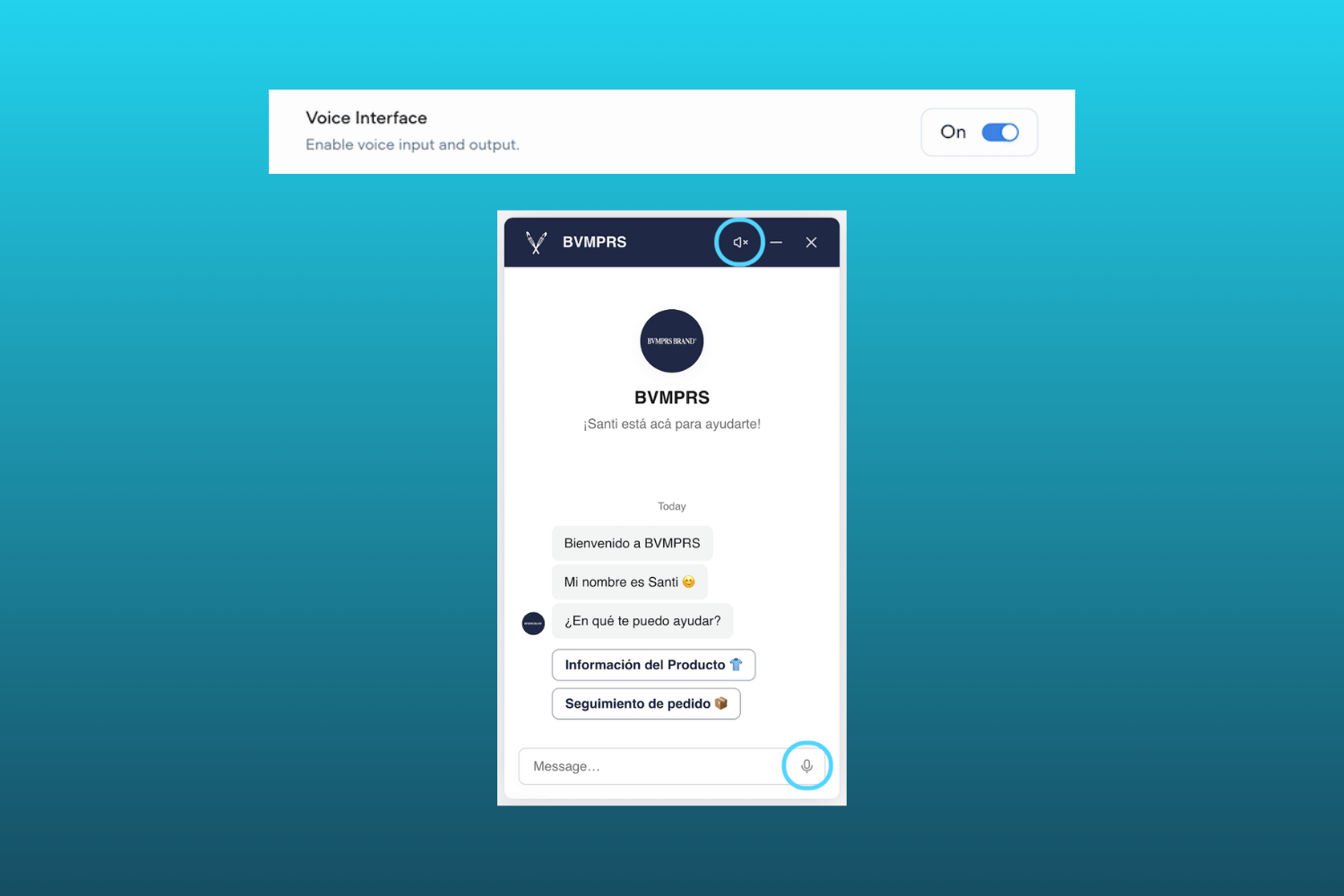

What I like most about this release is the audio input option for users. It gives them more flexibility and convenience when interacting with our agents.

To enable audio inputs on the web chat, go to Integrations, find the Voice toggle, and switch it on.

Then, your users will see a mic icon in the input field that they can use to start talking.

As for audio outputs, users need to unmute the chat using the audio icon on the top bar of the chat window.

Existing Chat Projects

Existing chat projects can benefit from this release too, since audio is now integrated natively inside every project.

Summary

With multi-modal projects, it's now possible to have both audio and chat interactions in the same agent.

Your users will have more flexibility and convenience when interacting with your assistant.

One thing to mention: audio inputs won't be recorded. The feature exists to enable TTS (Text-to-Speech) and STT (Speech-to-Text) capabilities for your agents.

Here are the official release notes

P.S.: Want to earn 2MM free AI tokens on Voiceflow 🎁? Sign up using this link!